How We Use Python to Sync Billions of Emails


Developing an email sync engine is hard. Python makes it easy (well, easier).

At Nylas, our love for all things Python is no secret. Our email sync engine — responsible for syncing and serving more than 10 billion emails for customers — is built entirely in Python.

Why? The answer is simple: Python is standard, reliable and (best of all for a startup like ours) boring. It’s a no-frills solution enabling us to provide a flexible and stable API for developers around the globe.

At PyBay 2017, I gave a talk on why Python’s “boringness” is inherently advantageous to work with. For companies building complex products, Python-driven solutions produce the most stable foundation for innovation.

Email is hard

Email protocols are notoriously fickle, requiring extensive documentation just to implement simple syncing to a SaaS app with one protocol. If a developer wants their email implementation to work with a wide variety of email standards, they have a lot of issues to consider (for example, getting Exchange, Exchange ActiveSync, and Gmail IMAP protocols to play nice together) in addition to parsing, encoding, authentication and more. The list of potential problems is extensive and there is almost never a simple solution.


These complications are simply the inevitable baggage that accompanies a 50-year-old technology like email. Standards change, new and complicated technologies are introduced, but legacy protocols must always be considered.

The Challenges

When Nylas first got its start, we had to consider how we’d build a sync engine API. There were, essentially, two options: 1) Store minimal data and act as a translation layer between two platforms or 2) Mirror the contents of mailboxes and serve as many requests as we could directly.

The first option would have been cheaper, but it would have made it harder to deliver the reliability that organizations depending on uptime need. So we went with option two, even though it was more expensive. We made it work by sharding our databases and using a semi-monolithic architecture: our services run on separate fleets of machines, but they all share the same underlying code and models for easier integration.
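Sharding only works if every query can be routed to the right database. A minimal, hypothetical sketch of one common approach: embed the shard number in the high bits of each 64-bit primary key, so any object's shard can be computed from its ID without a lookup table. The 48-bit split, the helper names and the connection strings are illustrative assumptions, not a description of our production schema.

```python
# Hypothetical sketch: encode a shard ID in the high bits of a 64-bit primary key.
# SHARD_SHIFT and the helper names are illustrative assumptions.

SHARD_SHIFT = 48                               # low 48 bits hold the shard-local row ID
MAX_LOCAL_ID = (1 << SHARD_SHIFT) - 1
MAX_SHARD_ID = (1 << (63 - SHARD_SHIFT)) - 1   # keeps IDs within a signed 64-bit BIGINT


def make_id(shard_id: int, local_id: int) -> int:
    """Combine a shard ID and a shard-local auto-increment value into one global ID."""
    if not 0 <= shard_id <= MAX_SHARD_ID:
        raise ValueError(f"shard_id out of range: {shard_id}")
    if not 0 <= local_id <= MAX_LOCAL_ID:
        raise ValueError(f"local_id out of range: {local_id}")
    return (shard_id << SHARD_SHIFT) | local_id


def shard_for_id(global_id: int) -> int:
    """Recover the shard that owns a row from its global ID alone."""
    return global_id >> SHARD_SHIFT


def dsn_for_id(global_id: int, shard_dsns: dict) -> str:
    """Pick the connection string for the shard that owns global_id."""
    return shard_dsns[shard_for_id(global_id)]


if __name__ == "__main__":
    dsns = {0: "mysql://db-0/mail", 1: "mysql://db-1/mail"}  # hypothetical hosts
    account_id = make_id(shard_id=1, local_id=42)
    print(account_id)                    # 281474976710698
    print(dsn_for_id(account_id, dsns))  # mysql://db-1/mail
```

The trade-off of this scheme is that an object's shard is fixed when its ID is minted; moving data to another shard means issuing new IDs.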


This architecture positioned us to better tackle bigger challenges, like threading emails, syncing tags and folders and more. The end result: the Nylas Sync Engine. The Nylas Sync Engine is open source and provides a RESTful API that enables developers to integrate messaging into their applications. It has more than 90,000 lines of Python code, including tests and migrations, and helps to wrangle an ecosystem of protocols, protocol offshoots, parsing, encoding and much, much more.

In a Tech Stack, Boring is Good

To build out our architecture, we needed an extensible programming language like Python and its many libraries. For example, we use flanker, a parsing library, extensively to help facilitate email deliverability. We also use Flask, gevent, SQLAlchemy and pytest, along with other tools like HAProxy, nginx, gunicorn, MySQL, ProxySQL, Ansible, Redis and more. As far as stacks go, it looks like we made some fairly bland choices. We did, and that is by design.

We chose to run with boring tools for a simple reason: we’re a small company and can dedicate only so many resources to innovation. In fact, part of our design philosophy derives from Dan McKinley’s essay “Choose Boring Technology,” in which he argues that each company has a limited capacity for innovation before it exhausts itself out of business. This concept drove our early decisions at Nylas and informs our central API philosophy, which is to enable our clients to build one integration instead of many. It pushed us towards battle-hardened technologies that we know well and don’t have to worry about, which frees us to do more elsewhere.

For example, we use MySQL as our database. We chose MySQL early on because we knew it well, many potential DBA hires knew it well, and we needed to save our innovation energy for other problems. To be sure, relying on MySQL brought plenty of growing pains (see our blog post on Growing up with MySQL), but we were able to overcome them with the smart application of ProxySQL, horizontal sharding and other techniques.

Knowing our strengths in MySQL, and how we could later extend it with ProxySQL and other tools, helped us split our database into smaller, more manageable pieces with almost no downtime and without a complete redesign. In fact, we use MySQL to record and replay every change made during a mailbox sync in our transaction tables, through a little bit of magic provided by ProxySQL and SQLAlchemy. This powers how we sync, our webhooks, our streaming API and more.
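The record-and-replay idea itself can be illustrated without ProxySQL or SQLAlchemy. This is a minimal sketch that uses the standard library's `sqlite3` as a stand-in for MySQL; the `message` and `txn_log` tables and the helper names are hypothetical. Each write to a message appends a row to the log inside the same database transaction, and consumers such as webhooks or a streaming API replay the log from the last cursor they saw. (SQLAlchemy's session events, such as `before_flush`, are one place where this kind of logging can be hooked in automatically.)

```python
import json
import sqlite3

# Minimal sketch: sqlite3 stands in for MySQL; schema and names are hypothetical.
SCHEMA = """
CREATE TABLE message (id INTEGER PRIMARY KEY, subject TEXT, unread INTEGER);
CREATE TABLE txn_log (
    id INTEGER PRIMARY KEY AUTOINCREMENT,  -- monotonically increasing cursor
    object_type TEXT NOT NULL,
    object_id INTEGER NOT NULL,
    command TEXT NOT NULL,                 -- 'insert' or 'update'
    snapshot TEXT                          -- JSON copy of the row after the change
);
"""


def upsert_message(conn, msg_id, subject, unread):
    """Write a message and its log entry atomically, so the log never misses a change."""
    with conn:  # commits both writes together, or rolls both back on error
        exists = conn.execute("SELECT 1 FROM message WHERE id = ?", (msg_id,)).fetchone()
        conn.execute(
            "INSERT OR REPLACE INTO message (id, subject, unread) VALUES (?, ?, ?)",
            (msg_id, subject, int(unread)),
        )
        conn.execute(
            "INSERT INTO txn_log (object_type, object_id, command, snapshot) "
            "VALUES (?, ?, ?, ?)",
            ("message", msg_id, "update" if exists else "insert",
             json.dumps({"subject": subject, "unread": bool(unread)})),
        )


def replay(conn, after_id=0):
    """Return every change logged after the cursor `after_id`, oldest first."""
    rows = conn.execute(
        "SELECT id, object_type, object_id, command, snapshot FROM txn_log "
        "WHERE id > ? ORDER BY id",
        (after_id,),
    ).fetchall()
    return [
        {"cursor": r[0], "type": r[1], "id": r[2], "command": r[3], "data": json.loads(r[4])}
        for r in rows
    ]


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    upsert_message(conn, 1, "Hello", unread=True)
    upsert_message(conn, 1, "Hello", unread=False)  # the user read the message
    for change in replay(conn):
        print(change["cursor"], change["command"], change["data"])
    # A webhook consumer that already handled cursor 1 only sees the update:
    print(replay(conn, after_id=1))
```

Writing the log entry in the same transaction as the change is what makes replay trustworthy: a consumer never sees a change that was rolled back and never misses one that committed.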

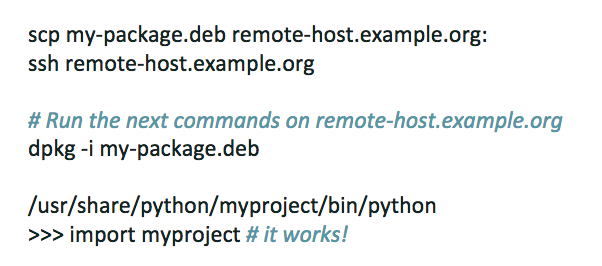

With so many dependencies, we deploy our application as a Python virtualenv wrapped in a Debian package. Specifically, we use a tool called dh-virtualenv, which lets us use Debian’s package manager, dpkg, to deploy dependencies and vet artifacts before pushing them to Amazon S3. As McQueen notes, the resulting deploy script winds up being simple.
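A hedged sketch of what such a deploy flow could look like, written in Python. It is not our actual script; it assumes a package named `sync-engine`, a `debian/` directory already configured to build with dh-virtualenv, the AWS CLI for S3 transfers, and a bucket name, all of which are hypothetical. By default it only prints the commands.

```python
import shlex
import subprocess

# Hypothetical values: package name, architecture and bucket are assumptions.
PACKAGE = "sync-engine"
BUCKET = "s3://example-deploy-bucket/debs"


def deb_filename(version: str, arch: str = "amd64") -> str:
    """Debian's naming convention: <package>_<version>_<arch>.deb."""
    return f"{PACKAGE}_{version}_{arch}.deb"


def build_and_publish_plan(version: str) -> list:
    """Commands for a build host: build the .deb, sanity-check it, upload it to S3."""
    deb = deb_filename(version)
    return [
        # dh-virtualenv runs as part of the normal Debian build, so the virtualenv
        # and its dependencies end up inside the .deb, written to the parent directory.
        ["dpkg-buildpackage", "-us", "-uc", "-b"],
        # Minimal vetting step: fail if the package metadata can't be read.
        ["dpkg-deb", "--info", f"../{deb}"],
        ["aws", "s3", "cp", f"../{deb}", f"{BUCKET}/{deb}"],
    ]


def install_plan(version: str) -> list:
    """Commands for each app server: fetch the artifact and install it with dpkg."""
    deb = deb_filename(version)
    return [
        ["aws", "s3", "cp", f"{BUCKET}/{deb}", f"/tmp/{deb}"],
        ["sudo", "dpkg", "-i", f"/tmp/{deb}"],
    ]


def run(commands: list, dry_run: bool = True) -> None:
    """Print each command; execute only when dry_run is False, stopping on failure."""
    for cmd in commands:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(build_and_publish_plan("1.2.3"))
    run(install_plan("1.2.3"))
```

The version passed in must match the one in `debian/changelog`, since that is where `dpkg-buildpackage` takes the package version from. Because the whole virtualenv ships inside one `.deb`, rolling back is just installing the previous package.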

With these tools in place, we enjoy a reliable tech stack that doesn’t set out to reinvent the wheel. It simply helps to sync and deliver email while meeting everyone’s expectations on uptime, scale and stability.

Syncing and Checking

We use Python on multicore machines across our fleets. Because CPython's global interpreter lock (GIL) lets only one thread execute Python bytecode at a time, we have to run multiple processes to get the most out of those cores. Within each process, we use gevent, a coroutine library, to sync about 100 accounts. This saves us a lot of memory and OS scheduling overhead. Here’s what a process looks like:

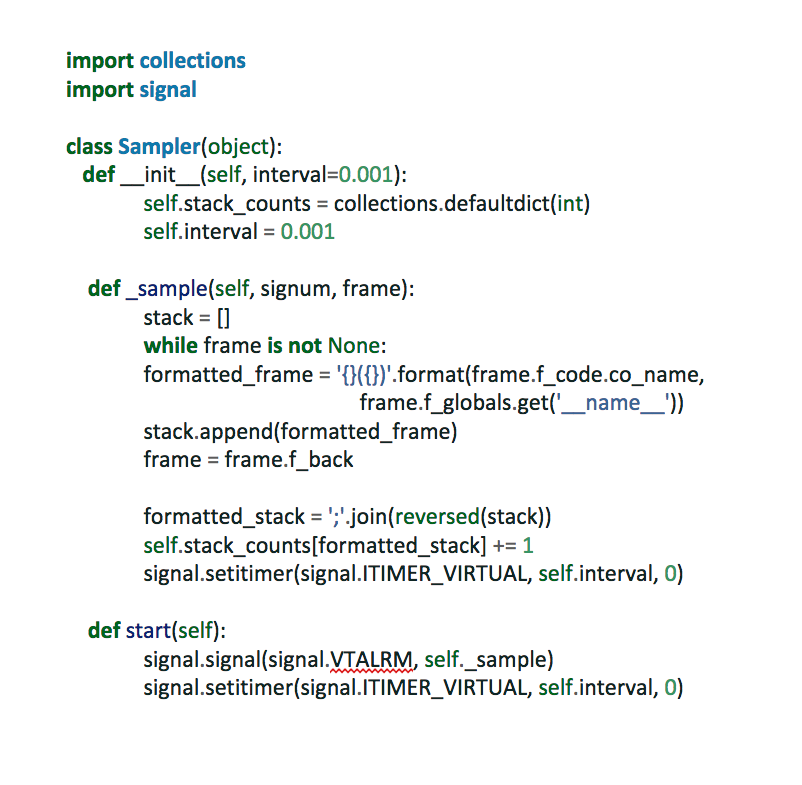

Each green box is a gevent greenlet. Greenlets are lightweight coroutines that let us build fine-grained control flows, ranging from a greenlet managing an entire Gmail sync flow down to greenlets managing trash, calendar, contact syncs and more. To monitor all this, we run a special watchdog greenlet alongside all the flows; if it doesn’t get to run within a set amount of time, meaning some other greenlet is hogging the process, it logs an event. When this happens, we run a sampling profiler that periodically records the application’s call stack so we can analyze where the application is getting hung up. In Python, a sampler like this can be built from the standard library’s signal timers and frame introspection.
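A minimal sketch of such a sampler, assuming a Unix system and that it runs in the main thread. It uses `signal.setitimer` with `ITIMER_PROF`, so the kernel interrupts the process each time it has consumed a slice of CPU time, and the handler records the Python stack that was executing. The class name and interval are hypothetical; this is an illustration, not our production profiler.

```python
import collections
import signal
import time


class StackSampler:
    """Hypothetical minimal sampling profiler (Unix only, must run in the main thread)."""

    def __init__(self, interval: float = 0.005):
        self.interval = interval  # seconds of CPU time between samples
        self.counts = collections.Counter()

    def _sample(self, signum, frame):
        # `frame` is the Python frame that was executing when SIGPROF arrived.
        stack = []
        while frame is not None:
            code = frame.f_code
            stack.append(f"{code.co_filename}:{code.co_name}")
            frame = frame.f_back
        # The folded format lists the root frame first, so reverse the walk.
        self.counts[";".join(reversed(stack))] += 1

    def start(self) -> None:
        signal.signal(signal.SIGPROF, self._sample)
        # ITIMER_PROF fires after `interval` seconds of process CPU time, repeatedly.
        signal.setitimer(signal.ITIMER_PROF, self.interval, self.interval)

    def stop(self) -> None:
        # Disarm the timer; the handler stays installed but no longer fires.
        signal.setitimer(signal.ITIMER_PROF, 0, 0)

    def folded(self) -> str:
        """One 'root;...;leaf count' line per unique stack."""
        return "\n".join(f"{stack} {n}" for stack, n in self.counts.most_common())


def busy_work(seconds: float) -> int:
    """Burn CPU so there is something to sample."""
    deadline = time.monotonic() + seconds
    total = 0
    while time.monotonic() < deadline:
        total += sum(range(1000))
    return total


if __name__ == "__main__":
    sampler = StackSampler()
    sampler.start()
    busy_work(0.5)
    sampler.stop()
    print(sampler.folded())
```

Sampling keeps overhead low because the process is only interrupted a few hundred times per second, rather than on every function call as with a tracing profiler.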

These samples can be fed into a flamegraph to show us what a process is doing, how the CPU is being used and what greenlet is holding it up.
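The watchdog that triggers this profiling can be sketched as well. gevent isn't required to show the shape of the design, so this minimal sketch swaps in the standard library's `asyncio`, which uses the same cooperative model: one event loop runs many account syncs in a single process, each account task fans out to child tasks, and a watchdog task logs an event when it wakes up much later than scheduled, which usually means some other task blocked the loop. Flow names, intervals and thresholds are illustrative assumptions.

```python
import asyncio
import logging
import time

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("watchdog")

# Illustrative assumptions, not production values.
WATCHDOG_INTERVAL = 0.05   # seconds between watchdog wake-ups
BLOCKING_THRESHOLD = 0.2   # lateness (seconds) that counts as a blocked loop


async def sync_flow(account_id: int, flow: str) -> None:
    """Stand-in for one sync flow; real code would make network calls here."""
    for _ in range(3):
        await asyncio.sleep(0.01)  # yields to other tasks, like a gevent network call


async def sync_account(account_id: int) -> None:
    """Parent task for one account, fanning out to one child task per flow."""
    flows = ("mail", "trash", "calendar", "contacts")  # hypothetical flow names
    await asyncio.gather(*(sync_flow(account_id, f) for f in flows))


async def watchdog(stalls: list) -> None:
    """Sleep on a fixed interval; waking up late means something hogged the loop."""
    while True:
        expected = time.monotonic() + WATCHDOG_INTERVAL
        await asyncio.sleep(WATCHDOG_INTERVAL)
        lateness = time.monotonic() - expected
        if lateness > BLOCKING_THRESHOLD:
            stalls.append(lateness)
            # A real system could start a sampling profiler at this point.
            log.warning("event loop blocked for ~%.2fs", lateness)


async def main(num_accounts: int = 100) -> list:
    stalls = []
    dog = asyncio.create_task(watchdog(stalls))
    await asyncio.sleep(0)  # let the watchdog arm its first timer
    # Many accounts share one process and one event loop.
    await asyncio.gather(*(sync_account(i) for i in range(num_accounts)))
    time.sleep(0.5)  # simulated bug: a blocking call that never yields
    await asyncio.sleep(WATCHDOG_INTERVAL * 2)  # give the watchdog time to report
    dog.cancel()
    try:
        await dog
    except asyncio.CancelledError:
        pass
    return stalls


if __name__ == "__main__":
    print(asyncio.run(main()))
```

The same reasoning applies to gevent: a greenlet that calls `gevent.sleep()` on an interval only gets scheduled again once the other greenlets yield, so its lateness directly measures how long something held the process.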

What We’re Working on Next

But as any organization knows, the fun doesn’t stop at overcoming challenges. Over the next few years, we plan to invest more in the Python ecosystem. For example, we’re looking to mypy to help us manage our code as it grows in complexity. In particular, we’re using mypy as a linter that performs static type checking. It’s an incremental project, but we’re excited to have the complexity-reducing powers of mypy on board.
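Using mypy as a linter lets annotations arrive one module at a time, since by default mypy doesn’t type-check the bodies of unannotated functions. A small, hypothetical example: the first function uses the comment-style annotations from PEP 484, which also work in Python 2 code, and the second uses Python 3 annotation syntax. The function names and data are illustrative only.

```python
from typing import Dict, List, Optional


# Comment-style annotations (PEP 484): valid in Python 2 code, and mypy checks them.
def folder_names(folders):
    # type: (Dict[str, int]) -> List[str]
    """Return folder names sorted by unread count, highest first."""
    return sorted(folders, key=lambda name: folders[name], reverse=True)


# The same idea with Python 3 annotation syntax.
def find_folder(folders: Dict[str, int], name: str) -> Optional[int]:
    """Return the unread count for `name`, or None if the folder doesn't exist."""
    return folders.get(name)


if __name__ == "__main__":
    counts = {"INBOX": 12, "Archive": 0, "Spam": 3}
    print(folder_names(counts))
    unread = find_folder(counts, "Trash")
    # Writing `unread + 1` directly would be flagged by mypy, because `unread`
    # is Optional[int]. Narrowing the type first satisfies the checker:
    if unread is not None:
        print(unread + 1)
```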

We’re also going to start migrating towards Python 3. Finally, we’re looking at moving our transaction log to a Kafka event backbone. This should enable us to move towards a microservices-based architecture, giving us more flexibility by not requiring every service to talk directly to the database.

For us, Python is in nearly everything we do because of its simplicity, its diversity of libraries and how well it works on the server. For curious developers, there is a lot more to learn about how Python can simplify code or form the foundation of a new application. And, thanks to its robust and productive community, learning how to act on those possibilities can be fairly simple. That community is one of the main reasons we use Python so extensively, and we’re looking forward to seeing what else it can do and how we can contribute to it as well. Python is standard, extensible, time-tested and, best of all for any startup, boring. We can’t wait to see where it goes from here.

Watch my full presentation here:

Nylas CTO and Co-founder Christine Spang started working on free software via the Debian project when she was 15. She previously worked at Ksplice and loves to trad climb.